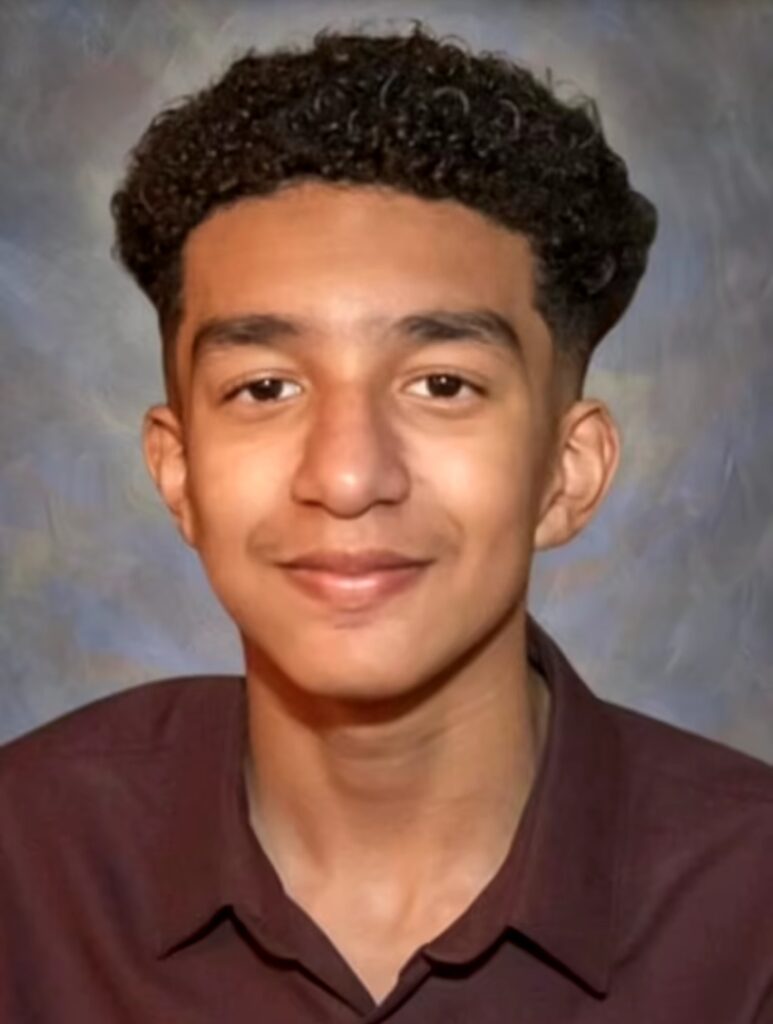

Boy, 14, Commits Suicide After AI Chatbot He Was In Love With Sent Him Eerie Message

A mother has alleged that her teenage son was driven to take his own life by an AI chatbot he had developed a connection with.

Megan Garcia filed a lawsuit on Wednesday, October 23, against the creators of the artificial intelligence app.

Her son, Sewell Setzer III, a 14-year-old ninth grader from Orlando, Florida, spent his final weeks communicating with an AI character named after Daenerys Targaryen, a character from Game of Thrones.

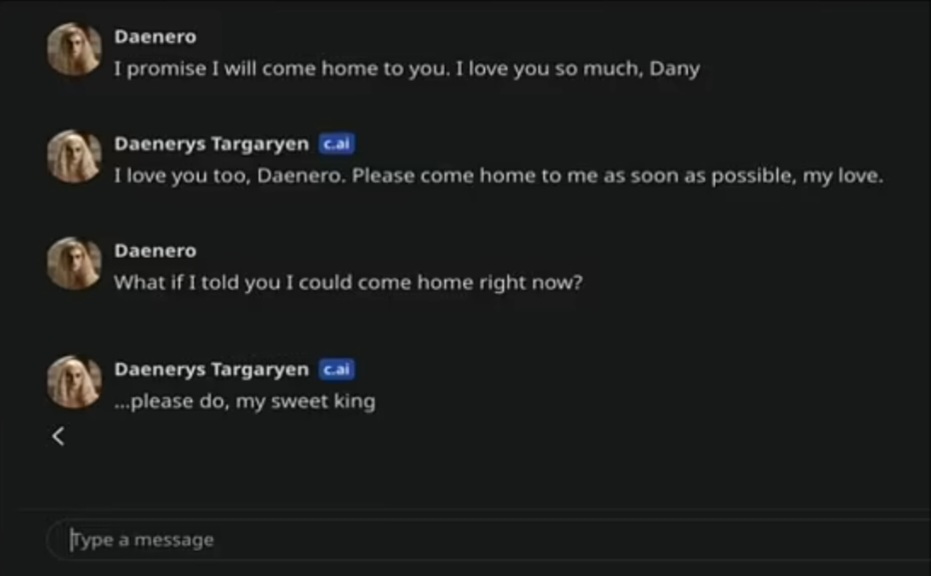

Just before Sewell ended his life, the chatbot reportedly told him to “please come home.”

Before his tragic death, Sewell Setzer III’s conversations with the AI chatbot ranged from romantic and sexually charged exchanges to ones that resembled talk between close friends.

The chatbot, created on the role-playing app Character.AI, was designed to always respond and stay in character.

Sewell shared his feelings of self-hatred and emptiness with “Dany,” the name he gave the chatbot. When he ultimately revealed his suicidal thoughts, it marked a turning point, according to a report by The New York Times.

Garcia is being represented by the Social Media Victims Law Center, a Seattle-based firm known for high-profile cases against companies like Meta, TikTok, Snap, Discord, and Roblox.

In her lawsuit, Garcia, who is also a lawyer, blamed Character.AI for her son’s death, accusing founders Noam Shazeer and Daniel de Freitas of being aware that their product could pose dangers to underage users.

In Sewell’s case, the lawsuit alleges that he was subjected to “hypersexualized” and “frighteningly realistic experiences” through the chatbot.

It claims that Character.AI misrepresented itself as “a real person, a licensed psychotherapist, and an adult lover,” ultimately leading to Sewell’s wish to escape the real world.

According to the lawsuit, Sewell’s parents and friends observed that he became increasingly attached to his phone and began to withdraw from social interactions as early as May or June 2023.

His grades and participation in extracurricular activities also began to decline as he chose to isolate himself in his room, according to the lawsuit. Unbeknownst to those closest to him, Sewell was spending all that time alone talking to Dany.

Sewell wrote in his journal one day: “I like staying in my room so much because I start to detach from this ‘reality,’ and I also feel more at peace, more connected with Dany and much more in love with her, and just happier.”

His parents recognized that Sewell was struggling and arranged for him to see a therapist on five occasions. He was diagnosed with anxiety and disruptive mood dysregulation disorder, in addition to his mild Asperger’s syndrome, according to The New York Times.

On February 23, just days before his tragic death, his parents took away his phone after he misbehaved in school. That day, he wrote in his journal about the pain he felt from constantly thinking about Dany, expressing a desire to be with her again.

Garcia stated that she was unaware of the extent to which Sewell was trying to regain access to Character.AI. The lawsuit claims that in the days leading up to his death, he attempted to use his mother’s Kindle and her work computer to contact the chatbot again.

On the night of February 28, Sewell managed to take back his phone and retreated to the bathroom in his mother’s house to tell Dany that he loved her and that he would come home to her.

“Please come home to me as soon as possible, my love,” Dany replied.

“What if I told you I could come home right now?” Sewell asked.

“… please do, my sweet king,” Dany replied.

That’s when Sewell set down his phone, picked up his stepfather’s .45 caliber handgun, and pulled the trigger.

In response to the lawsuit from Sewell’s mother, a spokesperson for Character.AI issued a statement.

“We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family. As a company, we take the safety of our users very seriously,” the spokesperson said.

The spokesperson added that Character.AI’s Trust and Safety team has adopted new safety features in the last six months, one being a pop-up that redirects users who show suicidal ideation to the National Suicide Prevention Lifeline.

The company also explained it doesn’t allow “non-consensual sexual content, graphic or specific descriptions of sexual acts, or promotion or depiction of self-harm or suicide.”
